So, an article came out this morning in the Chronicle of Higher Education covering the controversy over the Nowak et al. Nature paper attacking kin selection. I’ve written about the paper twice previously, once here, providing an xtranormal video dramatization of the issues, and once here, trying to provide some context to explain why so many people had gotten up in arms about this particular paper (as opposed to the hundreds of scientific papers published every year that are equally wrong).

Unfortunately, the article is behind the Chronicle’s paywall, so you may not be able to read it. (I don’t know if they permit the same sorts of work-arounds that the New York Times does.)

The thing that most strikes me in the article comes at the end:

Right now Mr. Nowak is working to understand the mathematics of cancer; previously, he has outlined the mathematics of viruses. It falls within his career mission to “provide a mathematical description where there is none,” he says, a goal at once modest and lofty. He would also like to write a book on the intersection of religion and science, a publication that would no doubt further endear him to atheists.

He knows that the debate on kin selection is far from over, though he sees the ad hominem attacks as a good sign. “If the argument is now on this level,” he says, “I have won.”

along with this comment from Smayersu:

Science is written in the language of mathematics. Why is it that the biologists cry “foul” when the mathematicians and physicists investigate the theory of evolution? The biological community should welcome the help of those who are trained to examine problems from a rigorous mathematical perspective.

Two things.

First, the criticism of Nowak had nothing to do with his providing a mathematical framework. In fact, most of the people who have criticized Nowak are, themselves, mathematical biologists. The issue is that the paper discounts and misrepresents a huge body of mathematical work. In fact, while Nowak has written a number of interesting and original papers, he has also written a number of papers in which he claims to “provide a mathematical description where there is none,” the problem being that in many cases, there actually is a mathematical description. Often quite an old one.

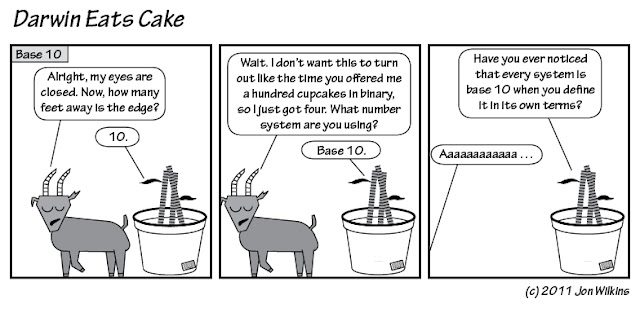

It is as if I were to write a paper that said, “You know who was wrong? Albert Einstein! Because, look, Special Relativity does not work when you incorporate gravity. So I’ve created a new thing that I call ‘Generalized Relativity.’”

Second, it is absolutely true that ad hominem attacks do not constitute legitimate scientific criticism. However, the fact that some of the attacks on Nowak have been ad hominem certainly does not constitute evidence that he is right.

To my mind, the relevance of the ad hominem attacks is this. They reflect a deep sense of frustration on the part of the field towards Nowak and his career success. Nowak has repeatedly violated one of the basic principles of academic scholarship: that you give appropriate credit to previous work. And yet, the academic system has consistently rewarded him over other researchers who put more effort into making sure that they are doing original work and into making sure to credit their colleagues.

It is as if, after publishing my paper on Generalized Relativity, I were to be awarded tens of millions of dollars in grant money and a chair at Harvard, while the legions of physicists pointing out Einstein’s later work were ignored. I’m guessing that I might find myself the subject of some ad hominem attacks, but it would not mean that I was right.

As a colleague of mine commented this morning, “ah, Nowak thinks he’s won because of the ad hominem attacks. by that standard, Donald Trump must be a serious presidential candidate.”

Nowak, M., Tarnita, C., & Wilson, E. (2010). The evolution of eusociality. Nature, 466(7310), 1057-1062. DOI: 10.1038/nature09205